Action Segmentation with Mixed Temporal Domain Adaptation

Abstract

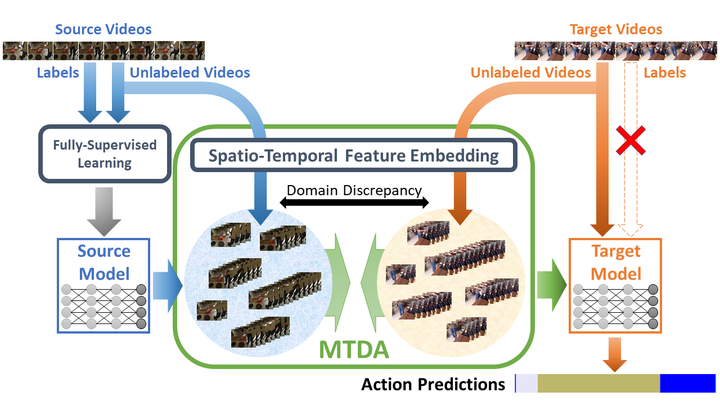

The main progress in action segmentation has come from densely annotated data used for fully supervised learning. Since manually annotating frame-level actions is time-consuming and challenging, we propose to exploit auxiliary unlabeled videos, which are much easier to obtain, by formulating this problem as a domain adaptation (DA) problem. Although various DA techniques have been proposed in recent years, most of them have been developed only for the spatial direction. Therefore, we propose Mixed Temporal Domain Adaptation (MTDA) to jointly align frame- and video-level embedded feature spaces across domains, and we further integrate a domain attention mechanism that focuses on aligning the frame-level features with higher domain discrepancy, leading to more effective domain adaptation. Finally, we evaluate our proposed methods on three challenging datasets (GTEA, 50Salads, and Breakfast) and validate that MTDA outperforms the current state-of-the-art methods on all three datasets by large margins (e.g., a 6.4% gain in F1@50 and a 6.8% gain in the edit score on GTEA).
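The abstract does not spell out how the domain attention is computed. The sketch below is one plausible reading under stated assumptions, not the authors' implementation. It assumes that a domain classifier outputs, for each frame j, the probability p_j that the frame comes from the source domain. It uses the binary entropy of p_j as an inverse proxy for domain discrepancy: a confident classifier means the frame is still easy to tell apart across domains, so that frame should get more attention. It also assumes weights normalized to [0, 1] and frame-level features pooled into a video-level feature with a residual factor (1 + w_j). All function names are hypothetical, and the adversarial training of the frame- and video-level domain classifiers (for example via gradient reversal) is omitted.

```python
import math


def binary_entropy(p, eps=1e-12):
    """Entropy (in nats) of a Bernoulli distribution with parameter p."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def domain_attention_weights(domain_probs):
    """Hypothetical domain attention.

    Frames the domain classifier separates confidently (low entropy,
    i.e. higher domain discrepancy) get weights near 1; ambiguous frames
    (p close to 0.5, already well aligned) get weights near 0.
    """
    max_entropy = math.log(2.0)  # entropy of p = 0.5
    return [1.0 - binary_entropy(p) / max_entropy for p in domain_probs]


def attentive_temporal_pooling(frame_features, domain_probs):
    """Pool per-frame feature vectors into one video-level vector.

    Each frame is scaled by (1 + w_j), an assumed residual form, so no
    frame is discarded entirely, then the scaled frames are averaged.
    """
    weights = domain_attention_weights(domain_probs)
    dim = len(frame_features[0])
    pooled = [0.0] * dim
    for w, feat in zip(weights, frame_features):
        for k in range(dim):
            pooled[k] += (1.0 + w) * feat[k]
    return [v / len(frame_features) for v in pooled]


if __name__ == "__main__":
    feats = [[1.0, 2.0], [3.0, 4.0]]
    # Frame 0 is ambiguous to the domain classifier; frame 1 is not.
    print(domain_attention_weights([0.5, 0.99]))
    print(attentive_temporal_pooling(feats, [0.5, 0.99]))
```

Using 1 + w_j instead of w_j alone keeps every frame's contribution nonzero, so frames that are already well aligned still inform the video-level feature, while poorly aligned frames are emphasized.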

Citation

Min-Hung Chen, Baopu Li, Yingze Bao, and Ghassan AlRegib, “Action Segmentation with Mixed Temporal Domain Adaptation”, IEEE Winter Conference on Applications of Computer Vision (WACV), 2020.

BibTeX

@inproceedings{chen2020mixed,
  title={Action Segmentation with Mixed Temporal Domain Adaptation},
  author={Chen, Min-Hung and Li, Baopu and Bao, Yingze and AlRegib, Ghassan},
  booktitle={IEEE Winter Conference on Applications of Computer Vision (WACV)},
  year={2020}
}

Members

¹Georgia Institute of Technology, ²Baidu USA

*Work done during an internship at Baidu USA.
