Interpretable Self-Attention Temporal Reasoning for Driving Behavior Understanding

Abstract

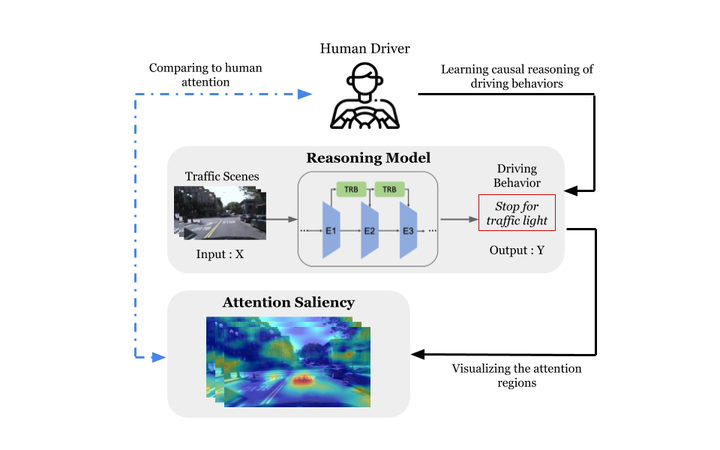

Performing driving behaviors based on causal reasoning is essential to ensure driving safety. In this work, we investigated how well state-of-the-art 3D Convolutional Neural Networks (CNNs) classify driving behaviors that depend on causal reasoning. We proposed a perturbation-based visual explanation method to visually inspect what the models attend to. By examining the video attention saliency, we found that existing models could not precisely capture the causes (e.g., a traffic light) of a specific action (e.g., stopping). We therefore proposed the Temporal Reasoning Block (TRB) and introduced it into these models. With the TRB models, we achieved an accuracy of 86.3%, outperforming the state-of-the-art 3D CNNs from previous works. The attention saliency also demonstrated that the TRB helped the models focus on the causes more precisely. Based on both numerical and visual evaluations, we concluded that our proposed TRB models provide accurate driving behavior predictions by learning the causal reasoning behind the behaviors.
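The abstract does not detail how the perturbation-based explanation is computed. One common perturbation scheme for video classifiers is occlusion: blank out a spatiotemporal patch, re-run the model, and record how much the score of the explained behavior (e.g., stopping) drops, so that regions whose removal hurts the prediction most (e.g., a traffic light) receive high saliency. The NumPy sketch below illustrates only that generic idea; it is not the authors' implementation, and the scoring callable `model_score`, the patch and stride sizes, the zero fill value, and the toy model are all hypothetical assumptions.

```python
import numpy as np


def occlusion_saliency(model_score, video, target, patch=(2, 8, 8),
                       stride=(2, 8, 8), fill=0.0):
    """Occlusion-style perturbation saliency for a video clip (generic sketch).

    model_score: hypothetical callable mapping a (T, H, W, C) array to a
                 1-D array of class scores (a stand-in for a 3D CNN).
    video:       array of shape (T, H, W, C).
    target:      index of the class whose score is being explained.
    Returns a (T, H, W) map holding, for each voxel, the average drop in the
    target score over all occluding patches that covered it.
    """
    T, H, W, _ = video.shape
    pt, ph, pw = patch
    st, sh, sw = stride
    base = model_score(video)[target]
    saliency = np.zeros((T, H, W))
    counts = np.zeros((T, H, W))
    # Slide a spatiotemporal occluder over the clip (patch/stride are assumptions).
    for t in range(0, max(T - pt, 0) + 1, st):
        for y in range(0, max(H - ph, 0) + 1, sh):
            for x in range(0, max(W - pw, 0) + 1, sw):
                perturbed = video.copy()
                perturbed[t:t + pt, y:y + ph, x:x + pw, :] = fill  # assumed fill value
                drop = base - model_score(perturbed)[target]
                saliency[t:t + pt, y:y + ph, x:x + pw] += drop
                counts[t:t + pt, y:y + ph, x:x + pw] += 1
    # Average over overlapping patches; voxels never covered stay at zero.
    return saliency / np.maximum(counts, 1)


# Toy usage: a hypothetical "model" whose class-0 score depends only on the
# top-left 8x8 region of channel 0 (think of it as a traffic-light region).
def toy_score(clip):
    return np.array([clip[:, :8, :8, 0].mean(), clip.mean()])


clip = np.ones((4, 16, 16, 3))
sal = occlusion_saliency(toy_score, clip, target=0)
print(sal[:, :8, :8].mean(), sal[:, 8:, :].max())  # 0.5 0.0
```

With non-overlapping patches, as in the toy run, each voxel is scored exactly once; choosing a `stride` smaller than `patch` gives a smoother map at the cost of more forward passes.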

Citation

Yi-Chieh Liu*, Yung-An Hsieh*, Min-Hung Chen, Chao-Han (Huck) Yang, Jesper Tegner, and Yi-Chang (James) Tsai, “Interpretable Self-Attention Temporal Reasoning for Driving Behavior Understanding”, IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2020. (*equal contribution)

BibTeX

@inproceedings{liu2020interpretable,
  title={Interpretable Self-Attention Temporal Reasoning for Driving Behavior Understanding},
  author={Liu, Yi-Chieh and Hsieh, Yung-An and Chen, Min-Hung and Yang, C-H Huck and Tegner, Jesper and Tsai, Y-C James},
  booktitle={IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP)},
  year={2020}
}

Members

¹Georgia Institute of Technology, ²KAUST
