About Me

My name is Min-Hung (Steve) Chen (陳敏弘 in Chinese). I am a Senior Research Scientist at NVIDIA Research Taiwan, working on Vision+X Multimodal AI. I received my Ph.D. degree from Georgia Tech, advised by Prof. Ghassan AlRegib and in collaboration with Prof. Zsolt Kira. Before joining NVIDIA, I worked on Biometric Research for Cognitive Services as a Research Engineer II at Microsoft Azure AI, and on Edge-AI Research as a Senior AI Engineer at MediaTek.

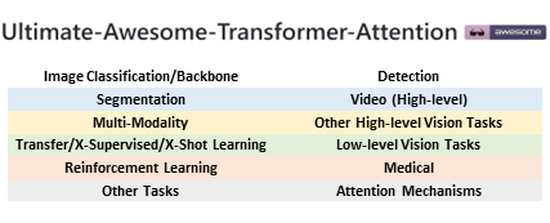

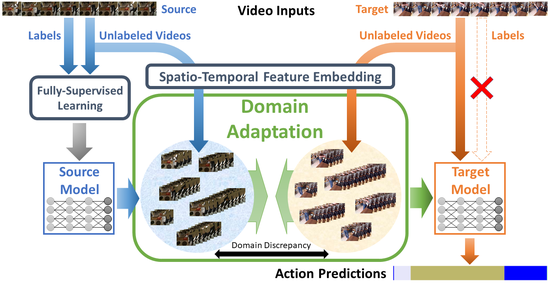

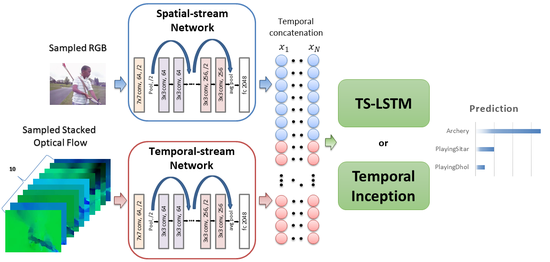

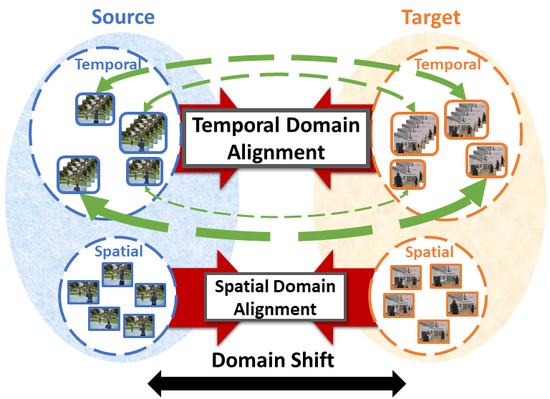

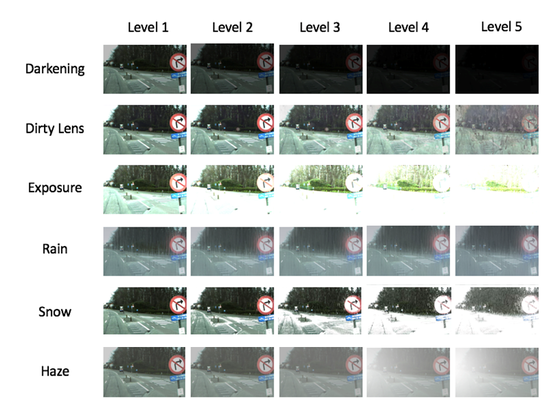

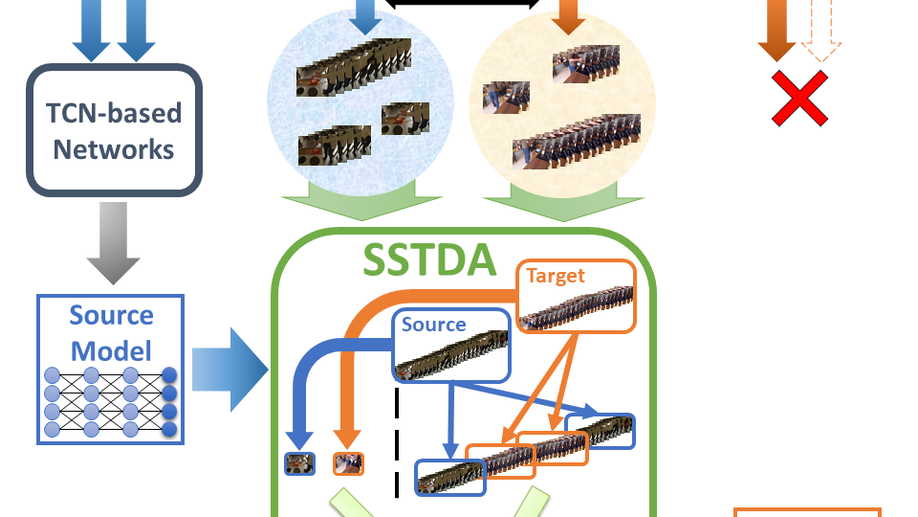

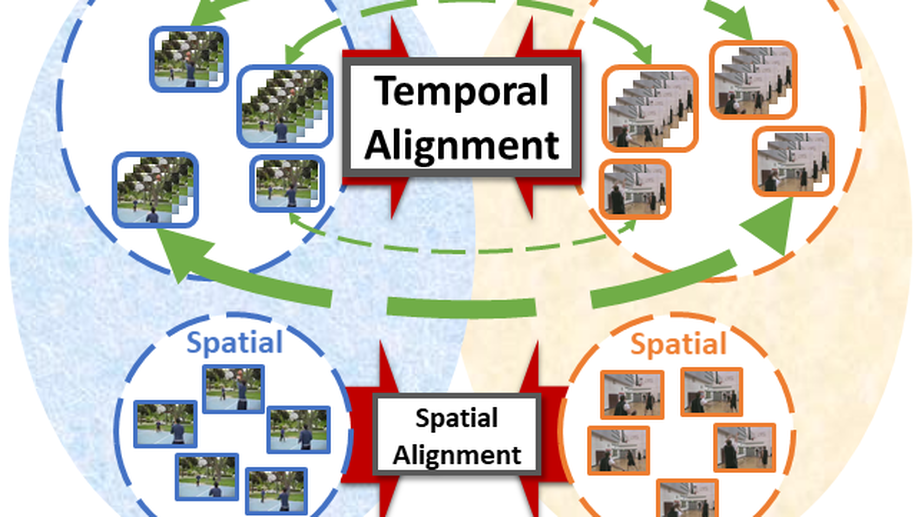

My research interests are mainly in Multimodal AI, including Vision-Language, 4D (video+depth) Understanding, Efficient Deep Learning, VLA, and Transformers. I am also interested in Learning without Full Supervision, including domain adaptation, transfer learning, continual learning, X-supervised learning, etc.

[Recruiting] NVIDIA Taiwan is hiring Research Scientists (full-time and internship). I am also open to research collaboration. Please drop me an email if you are interested.

[Note] The Projects, Talks, and Publications sections are out of date. Please check the News section for the latest updates.

Interests

- Transfer Learning

- Unsupervised Learning

- Video Understanding

- Vision Transformer

- Computer Vision

- Deep Learning

- Machine Learning

Education

PhD in Electrical and Computer Engineering, 2020

Georgia Institute of Technology

MSc in Integrated Circuits and Systems, 2012

National Taiwan University

BSc in Electrical Engineering, 2010

National Taiwan University